Last Updated on May 27, 2024 by Arnav Sharma

Microsoft Azure Event Hubs is a powerful, fully managed service that enables businesses to ingest millions of events per second and make them available for processing and storage. This cloud-based service is designed to handle large data streams in real time, making it an essential tool for any business that wants to collect and analyze data at scale. Event Hubs provides a scalable ingestion layer for event processing, and it can also store that data in the cloud (for example, with Event Hubs Capture) for future analysis.

Introduction to Microsoft Azure Event Hubs and its Importance

Microsoft Azure Event Hubs acts as a scalable event ingestion service, capable of handling millions of events per second, making it an essential component in the architecture of modern data-driven applications.

The importance of Microsoft Azure Event Hubs lies in its ability to seamlessly integrate with other Azure services, such as Azure Functions, Azure Stream Analytics, and Azure Logic Apps, enabling businesses to build robust and scalable event-driven architectures. By capturing and processing events in real-time, organizations can gain immediate insights, make data-driven decisions, and take proactive actions.

Furthermore, Azure Event Hubs offers features like automatic scaling (Auto-inflate on the Standard tier), geo-disaster recovery and geo-replication options, and built-in security. Together these support high availability, data durability, and compliance requirements. This makes it a good choice for businesses in sectors such as healthcare, finance, retail, and IoT, where real-time data processing and analysis are crucial for driving innovation and maintaining a competitive edge.

Understanding the basics of Azure Event Hubs

One of the key features that sets Azure Event Hubs apart is its ability to handle high-throughput ingress of events at a very large scale. It uses a partitioned consumer model. An event hub is split into partitions, and multiple consumers can read different partitions simultaneously, which improves efficiency and scalability. Each consumer group gets its own independent view of the entire event stream. Within a consumer group, each consumer reads only a subset of the partitions, which enables parallel processing and horizontal scaling.

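Azure Event Hubs also provides fault tolerance and reliability through data redundancy. Within a region, event data is stored redundantly, and in regions that support availability zones it is replicated across zones. Cross-region options, such as geo-disaster recovery and geo-replication, must be configured separately and depend on the pricing tier. Additionally, when events are sent without a partition key, Event Hubs distributes them across the available partitions. The event processor client libraries also balance partition ownership among the consumers in a group.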

Furthermore, Azure Event Hubs seamlessly integrates with other Azure services and tools, making it a versatile choice for building robust and real-time data processing solutions. It can be easily combined with Azure Functions, Azure Stream Analytics, Azure Logic Apps, and more, allowing developers to create comprehensive event-driven architectures.

Key features and benefits

Microsoft Azure Event Hubs offers a wide range of key features and benefits that can greatly enhance your data streaming and event processing capabilities.

1. Scalability: One of the standout features of Azure Event Hubs is its ability to handle massive amounts of data and scale effortlessly to accommodate high volumes of events. Whether you are dealing with millions or billions of events per day, Event Hubs can seamlessly handle the load, ensuring smooth data ingestion and processing.

2. Real-time event streaming: Event Hubs empowers you to capture and process events in real-time, enabling you to make faster and more informed business decisions. With low latency and high throughput, you can react to events as they occur, unlocking the potential for real-time analytics, monitoring, and alerting.

3. Seamless integration: Azure Event Hubs seamlessly integrates with other Azure services, enabling you to leverage a comprehensive ecosystem for your data processing needs. Whether you want to use Azure Functions for serverless event-driven computing, Azure Stream Analytics for real-time analytics, or Azure Logic Apps for workflow automation, Event Hubs provides a smooth integration experience.

4. Data retention: Event Hubs retains event data for a configurable period: up to 7 days on the Standard tier, and up to 90 days on Premium and Dedicated. This lets consumers replay and analyze recent events, which is particularly valuable for auditing, compliance, and troubleshooting. For longer-term history, Event Hubs Capture can write events to Azure Blob Storage or Azure Data Lake Storage.

5. Security and reliability: Microsoft Azure Event Hubs prioritizes the security and reliability of your event data. It offers built-in features such as access control policies, authentication mechanisms, and data encryption to ensure the confidentiality and integrity of your data. Event Hubs also provides high availability and fault tolerance by storing data redundantly within a region, across availability zones where the region supports them.

6. Easy integration with existing systems: Event Hubs supports multiple protocols and APIs, allowing you to seamlessly integrate with your existing systems and applications. Whether you prefer using AMQP, HTTP, or Apache Kafka, Event Hubs offers flexible options for sending and receiving events, enabling you to work with the tools and technologies you are already familiar with.

Use cases for Event Hubs

Microsoft Azure Event Hubs is a powerful and versatile service that offers a wide range of use cases for businesses across various industries. Whether you are in retail, finance, healthcare, or any other sector, Event Hubs can be leveraged to enhance your data streaming and real-time analytics capabilities.

1. Internet of Things (IoT) Applications:

Event Hubs is an excellent choice for handling massive volumes of data generated by IoT devices. With its ability to ingest and process millions of events per second, it enables businesses to collect and analyze data from sensors, devices, and machines in real-time. This can be invaluable in optimizing operations, monitoring equipment health, and predicting maintenance needs.

2. Log and Event Data Collection:

Event Hubs can act as a centralized hub for collecting logs and event data from various sources such as applications, servers, and virtual machines. By streaming this data into Event Hubs, businesses can easily analyze and gain insights into system performance, application behavior, and security incidents. It simplifies log aggregation and provides a scalable solution for managing large volumes of data.

3. Real-time Analytics and Machine Learning:

Event Hubs seamlessly integrates with other Azure services such as Azure Stream Analytics and Azure Functions, enabling real-time analytics and machine learning capabilities. By processing and analyzing incoming events in real-time, businesses can derive valuable insights, make data-driven decisions, and trigger automated actions based on predefined rules or machine learning models.

4. Clickstream and User Behavior Analysis:

For e-commerce or digital marketing companies, Event Hubs can capture and analyze clickstream data, user interactions, and behavior patterns. By tracking and processing this data in real-time, businesses can gain valuable insights into customer preferences, personalize user experiences, and optimize marketing campaigns for better conversion rates.

5. Event-driven Microservices Architecture:

Event Hubs can serve as a backbone for building event-driven architectures, allowing different microservices and components to communicate and exchange data asynchronously. This enables decoupled and scalable systems, where each microservice can react to events and trigger actions accordingly. It promotes agility, scalability, and fault tolerance in modern application development.

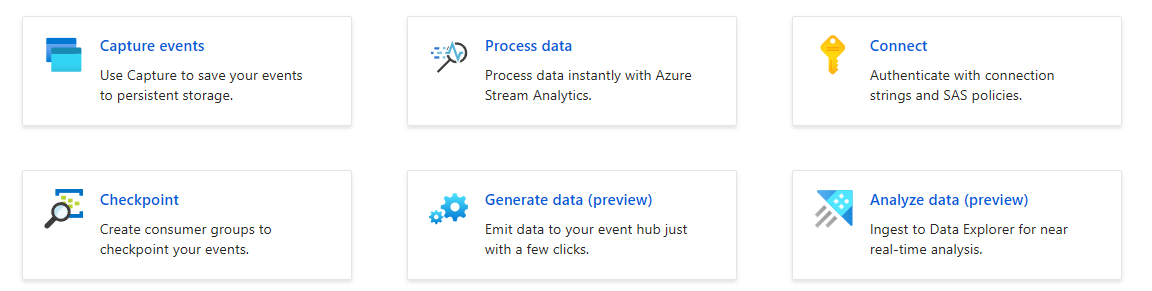

Getting started with Azure Event Hubs

To get started, create an Event Hubs namespace, which is a container for one or more Event Hubs, and then create your Event Hubs within it. An Event Hub acts as the entry point for your streaming data, allowing you to partition and distribute it for efficient processing. When creating an Event Hub, you specify the number of partitions based on your anticipated data load and processing requirements. Plan this carefully: on the Basic and Standard tiers, the partition count cannot be changed after creation.

Next, you'll need to consider authentication and security. Azure Event Hubs offers several authentication options, including shared access signatures (SAS) and Azure Active Directory (Azure AD, now Microsoft Entra ID) authentication. SAS uses a signed, time-limited token derived from a shared access policy's key to authorize access to your Event Hubs. Azure AD authentication integrates with your existing identity infrastructure and role-based access control.
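A SAS token is an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry timestamp, computed with a shared access policy's key. The sketch below follows the token format Microsoft documents for Event Hubs (`SharedAccessSignature sr=...&sig=...&se=...&skn=...`) and uses only the Python standard library. The function name and the namespace, hub, and policy values are placeholders. In most applications the Event Hubs client libraries build tokens for you from a connection string, so treat this as an illustration of the mechanism, not something you normally need to write.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(namespace: str, event_hub: str, key_name: str, key: str,
                       ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Hypothetical helper: build a SAS token for one event hub."""
    # The resource URI is URL-encoded before it is signed
    uri = urllib.parse.quote_plus(f"https://{namespace}.servicebus.windows.net/{event_hub}")
    # Expiry is a Unix timestamp in seconds
    expiry = str(int((time.time() if now is None else now) + ttl_seconds))
    # Sign "<encoded-uri>\n<expiry>" with HMAC-SHA256, keyed by the policy's key
    string_to_sign = f"{uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    # URL-encode the base64 signature so it is safe inside the token
    signature = urllib.parse.quote(base64.b64encode(digest), safe="")
    return f"SharedAccessSignature sr={uri}&sig={signature}&se={expiry}&skn={key_name}"
```

Keep `ttl_seconds` short and regenerate tokens before they expire. Until the `se` time passes, anyone holding the token has the rights of the policy that signed it.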

Once you have your Event Hubs set up and secured, you can start sending data to them. Azure Event Hubs supports multiple protocols and programming languages, giving you the flexibility to choose the one that best suits your needs. Whether you prefer .NET, Java, Python, or another supported language, the Azure SDK provides client libraries that simplify sending events.

To consume the streaming data from Event Hubs, you can utilize various options such as Azure Functions, Azure Stream Analytics, or custom-built applications. These tools and frameworks enable you to process and analyze incoming events in real time, unlocking valuable insights and enabling rapid decision-making.

Sending and receiving events with Azure Event Hubs

Sending and receiving events with Azure Event Hubs is a fundamental aspect of leveraging the power of this robust platform. Whether you are working with real-time data streaming, building event-driven applications, or implementing IoT solutions, Event Hubs provides the necessary infrastructure to handle massive amounts of events with ease.

To send events to Azure Event Hubs, you first need to create an Event Hub namespace and an Event Hub within it. This can be done through the Azure portal or programmatically using the Azure SDKs or REST APIs. Once your Event Hub is set up, you can start sending events from various sources such as applications, devices, or other services.

Event publishers can use the Event Hubs client libraries provided by Microsoft to establish a connection and send events using a sender or producer. These libraries are available for popular programming languages like .NET, Java, Python, and more. With just a few lines of code, you can serialize your data into an event and send it to the Event Hub.

On the receiving end, consumer groups let multiple applications or components access the event stream independently. Each consumer group is a separate view of the whole stream, with its own read positions. Within a consumer group, partitions are spread across consumer instances, and this is what lets you scale horizontally. Each consumer reads from specific partitions, and Microsoft recommends only one active reader per partition in a consumer group.
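A simplified model shows what "one reader per partition" means for scaling. The hypothetical `assign_partitions` function below deals partitions round-robin to the consumer instances of one consumer group. The SDK's event processor does not work this way internally; it claims and rebalances ownership through a checkpoint store as instances start and stop. The resulting shape is similar, though: every partition has exactly one owner, and consumer loads differ by at most one partition.

```python
from typing import Dict, List


def assign_partitions(partition_ids: List[str], consumers: List[str]) -> Dict[str, List[str]]:
    """Give each partition exactly one owner among the consumers of one group."""
    if not consumers:
        raise ValueError("at least one consumer is required")
    assignment: Dict[str, List[str]] = {c: [] for c in consumers}
    for i, partition_id in enumerate(partition_ids):
        # Partition i goes to consumer i mod N, so loads differ by at most one
        assignment[consumers[i % len(consumers)]].append(partition_id)
    return assignment


print(assign_partitions(["0", "1", "2", "3"], ["consumer-a", "consumer-b"]))
# {'consumer-a': ['0', '2'], 'consumer-b': ['1', '3']}
```

If a group has more consumers than partitions, the extra consumers receive no partitions and sit idle. The partition count therefore caps parallelism within a consumer group.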

To receive events, you can use the Event Hubs client libraries’ receiver or consumer functionality. The libraries handle the necessary communication with the Event Hubs service, allowing you to focus on processing the incoming events. You can choose to receive events in real-time or in batches, depending on your application requirements.

Additionally, Azure Event Hubs integrates seamlessly with other Azure services like Azure Functions, Azure Stream Analytics, and Azure Logic Apps. This enables you to build powerful event-driven architectures and leverage the full capabilities of the Azure ecosystem.

a. Sending events with Event Producer Clients (code examples)

Sending events with Event Producer Clients is a fundamental aspect of utilizing the power of Microsoft Azure Event Hubs. With the Event Producer Clients, you can easily send data from various sources to Event Hubs, enabling real-time event processing and analysis.

To get started, you will need to choose the producer client for your programming language. The Azure SDK provides an Event Hubs producer client (`EventHubProducerClient`) for a wide range of languages, including C#, Java, Python, and JavaScript/TypeScript (Node.js).

Let’s take a look at a code example using the Event Producer Client in C#:

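```csharp
using System;
using System.Text;
using System.Threading.Tasks;
using Azure.Messaging.EventHubs;
using Azure.Messaging.EventHubs.Producer;

class Program
{
    static async Task Main()
    {
        string connectionString = "<CONNECTION_STRING>";
        string eventHubName = "<EVENT_HUB_NAME>";

        await using (var producerClient = new EventHubProducerClient(connectionString, eventHubName))
        {
            // A batch enforces the maximum message size allowed by the Event Hub
            using EventDataBatch eventBatch = await producerClient.CreateBatchAsync();

            // Add your events to the batch; TryAdd returns false if an event does not fit
            EventData eventData = new EventData(Encoding.UTF8.GetBytes("Event 1"));
            if (!eventBatch.TryAdd(eventData))
                throw new InvalidOperationException("Event 1 is too large for the batch.");

            EventData eventData2 = new EventData(Encoding.UTF8.GetBytes("Event 2"));
            if (!eventBatch.TryAdd(eventData2))
                throw new InvalidOperationException("Event 2 is too large for the batch.");

            // Send the batch of events to the Event Hub
            await producerClient.SendAsync(eventBatch);
        }

        Console.WriteLine("Events sent successfully.");
    }
}
```

This code snippet demonstrates the basic process of sending events to the Event Hub. Replace `<CONNECTION_STRING>` with your Event Hubs connection string and `<EVENT_HUB_NAME>` with the name of your Event Hub. The example uses the `Azure.Messaging.EventHubs` NuGet package.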

By using the Event Producer Client, you have the flexibility to send multiple events in batches for efficient transmission. Additionally, you can customize each event with specific metadata or properties to enhance event processing on the receiving end.
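The batch-and-send loop can be sketched without a live Event Hub. The hypothetical `Event`, `SizeLimitedBatch`, and `build_batches` below model an SDK batch that refuses an event once a size limit would be exceeded, as `TryAdd` returning `false` does in the C# example. The loop then shows the usual response: send the full batch and start a new one. The byte limit is arbitrary, and only the event body is counted here; the real client also accounts for properties and protocol overhead.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Event:
    body: bytes
    # Application-defined metadata, analogous to event properties in the SDKs
    properties: Dict[str, str] = field(default_factory=dict)


class SizeLimitedBatch:
    """Hypothetical stand-in for an SDK batch with a maximum size."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self.events: List[Event] = []
        self.size = 0

    def try_add(self, event: Event) -> bool:
        # Refuse the event if it would push the batch over its limit
        if self.size + len(event.body) > self.max_bytes:
            return False
        self.events.append(event)
        self.size += len(event.body)
        return True


def build_batches(events: List[Event], max_bytes: int) -> List[List[Event]]:
    batches: List[List[Event]] = []
    batch = SizeLimitedBatch(max_bytes)
    for event in events:
        if len(event.body) > max_bytes:
            raise ValueError("event is larger than the maximum batch size")
        if not batch.try_add(event):
            batches.append(batch.events)  # batch is full: "send" it
            batch = SizeLimitedBatch(max_bytes)
            batch.try_add(event)  # always fits in an empty batch (checked above)
    if batch.events:
        batches.append(batch.events)  # send the final, partially filled batch
    return batches
```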

b. Receiving events with Event Consumer Clients (code examples)

Receiving events with Event Consumer Clients is a crucial aspect of harnessing the power of Microsoft Azure Event Hubs. Event Hubs can ingest and retain large volumes of data for real-time processing, which makes it a scalable and efficient foundation for event streaming.

To get started with receiving events, you will need to use Event Consumer Clients, which are available in multiple programming languages, including .NET, Java, and Python. These client libraries provide the necessary tools and functionalities to connect to Event Hubs and process incoming events seamlessly.

Let’s take a look at some code examples to understand how to receive events using Event Consumer Clients.

1. .NET Example:

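```csharp
using System;
using System.Text;
using Azure.Messaging.EventHubs;
using Azure.Messaging.EventHubs.Consumer;

string connectionString = "your_event_hub_connection_string";
string eventHubName = "your_event_hub_name";
string consumerGroup = "your_consumer_group_name";

await using (var consumer = new EventHubConsumerClient(consumerGroup, connectionString, eventHubName))
{
    // Reads from all partitions and keeps waiting for new events until cancelled.
    // EventHubConsumerClient suits exploration and testing; for production workloads
    // the SDK recommends EventProcessorClient, which adds load balancing and checkpointing.
    await foreach (PartitionEvent partitionEvent in consumer.ReadEventsAsync())
    {
        // Process the received event
        Console.WriteLine($"Received event: {Encoding.UTF8.GetString(partitionEvent.Data.Body.ToArray())}");
    }
}
```

2. Java Example: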

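```java
import com.azure.messaging.eventhubs.EventProcessorClient;
import com.azure.messaging.eventhubs.EventProcessorClientBuilder;
import com.azure.messaging.eventhubs.checkpointstore.blob.BlobCheckpointStore;
import com.azure.storage.blob.BlobContainerAsyncClient;
import com.azure.storage.blob.BlobContainerClientBuilder;
import java.util.concurrent.TimeUnit;

public class ReceiveEvents {
    public static void main(String[] args) throws InterruptedException {
        String connectionString = "your_event_hub_connection_string";
        String eventHubName = "your_event_hub_name";
        String consumerGroup = "your_consumer_group_name";

        // EventProcessorClient requires a checkpoint store; this one uses an Azure Blob
        // Storage container (package azure-messaging-eventhubs-checkpointstore-blob)
        BlobContainerAsyncClient blobContainer = new BlobContainerClientBuilder()
            .connectionString("your_storage_connection_string")
            .containerName("your_checkpoint_container")
            .buildAsyncClient();

        EventProcessorClient eventProcessorClient = new EventProcessorClientBuilder()
            .connectionString(connectionString, eventHubName)
            .consumerGroup(consumerGroup)
            .checkpointStore(new BlobCheckpointStore(blobContainer))
            .processEvent(eventContext -> {
                // Process the received event
                System.out.println("Received event: " + eventContext.getEventData().getBodyAsString());
                // Record progress; checkpointing every event is simple but costly
                eventContext.updateCheckpoint();
            })
            .processError(errorContext -> {
                // Handle any error that occurs during event processing
                System.err.println("Error occurred: " + errorContext.getThrowable());
            })
            .buildEventProcessorClient();

        eventProcessorClient.start();
        // start() returns immediately; keep the process alive while events arrive
        TimeUnit.SECONDS.sleep(30);
        eventProcessorClient.stop();
    }
}
```

3. Python Example: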

```python
from azure.eventhub import EventHubConsumerClient

connection_string = "your_event_hub_connection_string"
event_hub_name = "your_event_hub_name"
consumer_group = "your_consumer_group_name"

# consumer_group and eventhub_name are passed as keyword arguments
client = EventHubConsumerClient.from_connection_string(
    connection_string, consumer_group=consumer_group, eventhub_name=event_hub_name
)


def on_event(partition_context, event):
    # Process the received event
    print(f"Received event: {event.body_as_str()}")


with client:
    # Blocks and receives from all partitions; "-1" starts at the beginning of each partition
    client.receive(on_event=on_event, starting_position="-1")
```

These code examples demonstrate the basic setup and usage of Event Consumer Clients in different programming languages. By leveraging these clients, you can easily receive and process events from Azure Event Hubs, enabling you to unlock the full potential of real-time data streaming and analysis.

Understanding partitions and message ordering

In Event Hubs, a partition is a unit of storage and parallelism. It represents a “chunk” of the event stream, allowing for concurrent processing and scalability. When you create an Event Hub, you can specify the number of partitions based on your workload requirements.

One important aspect of partitions is message ordering. Each partition maintains its own sequence of events, ensuring that messages within the same partition are delivered in the order they were sent. However, it’s important to note that messages sent to different partitions may be processed out of order. This is by design to achieve high throughput and scalability.

To take full advantage of message ordering, you need to carefully consider your application's requirements. If maintaining strict message order is crucial, you can send all related events to the same partition, typically by giving them the same partition key. Those events are then stored and read in sequence. However, this approach limits scalability. All events for that key are handled by a single partition and, within a consumer group, by a single consumer.
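In the client libraries, sending related events to the same partition usually means giving them the same partition key, for example `create_batch(partition_key=...)` in the Python library. The service then hashes the key to choose a partition. The sketch below models that routing with a stand-in hash (SHA-256, not the service's actual hash function), so it will not predict which real partition a key maps to. What it does show is the guarantee that matters: every event with a given key lands in one partition, in the order it was sent. `partition_for_key` and `route` are hypothetical helpers.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple


def partition_for_key(partition_key: str, partition_count: int) -> int:
    """Map a key to a partition with a stable hash (a stand-in for the service's own)."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def route(events: List[Tuple[str, str]], partition_count: int) -> Dict[int, List[str]]:
    partitions: Dict[int, List[str]] = defaultdict(list)
    for key, payload in events:
        # Appending preserves send order within each partition
        partitions[partition_for_key(key, partition_count)].append(payload)
    return dict(partitions)


events = [
    ("order-42", "order-42:created"),
    ("order-7", "order-7:created"),
    ("order-42", "order-42:paid"),
    ("order-42", "order-42:shipped"),
]
routed = route(events, partition_count=4)
```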

On the other hand, if message order is not critical or you need high throughput, distributing events across multiple partitions can significantly improve performance. By leveraging the power of parallel processing, you can handle a larger volume of events concurrently.

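Note that Event Hubs does not provide a global order across partitions. Sequence numbers and offsets are assigned per partition. You can use them to track the order of events, and your position, within a partition, but not to order events from different partitions relative to each other. If you need a cross-partition order, you have to establish it in your application, for example with timestamps that your producers attach to events.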
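Sequence numbers increase within each partition, so a consumer can track its position per partition. It can then recognize events it has already handled, for example when it re-reads from an earlier position after a restart. The hypothetical `PartitionPositionTracker` below keeps one counter per partition and never compares numbers across partitions. It holds state in memory only; a real application would persist it alongside its processing results.

```python
from typing import Dict


class PartitionPositionTracker:
    """Remembers the last sequence number processed in each partition."""

    def __init__(self) -> None:
        self.last_seen: Dict[str, int] = {}

    def should_process(self, partition_id: str, sequence_number: int) -> bool:
        last = self.last_seen.get(partition_id)
        if last is not None and sequence_number <= last:
            return False  # at or below the last processed event: already handled
        self.last_seen[partition_id] = sequence_number
        return True
```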

Scaling and managing Azure Event Hubs

Scaling and managing Azure Event Hubs is crucial to ensure optimal performance and reliability for your event-driven applications. With the ability to handle massive volumes of data, Azure Event Hubs offers a scalable solution for ingesting, processing, and storing events in real-time.

One of the key benefits of Azure Event Hubs is its ability to scale with the demands of your application. Capacity is purchased in units: throughput units (TUs) on the Standard tier, processing units on Premium, and capacity units on Dedicated. On the Standard tier, the Auto-inflate feature can automatically increase the number of TUs as load grows, up to a maximum you configure. Partitions are what let you use that capacity in parallel. Incoming events are distributed across partitions, so more consumers can process them concurrently, and Event Hubs can handle a significantly higher volume of events without compromising performance.
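A rough capacity estimate follows from the Standard-tier throughput unit limits. The sketch assumes 1 MB/s or 1,000 events/s of ingress per TU, whichever is reached first. It takes 1 MB as 1,048,576 bytes and ignores egress limits. Check the current Event Hubs quotas for your tier before relying on the numbers. `estimate_throughput_units` is a hypothetical helper, and its result is a starting point for sizing rather than a guarantee.

```python
import math

# Assumed Standard-tier ingress limits per throughput unit (TU)
INGRESS_BYTES_PER_TU = 1024 * 1024  # 1 MB/s, taking MB as 2**20 bytes
INGRESS_EVENTS_PER_TU = 1000        # 1,000 events/s


def estimate_throughput_units(events_per_second: float, avg_event_bytes: float) -> int:
    by_events = events_per_second / INGRESS_EVENTS_PER_TU
    by_bytes = events_per_second * avg_event_bytes / INGRESS_BYTES_PER_TU
    # Whichever limit binds first decides; TUs are whole units, and at least one is needed
    return max(1, math.ceil(max(by_events, by_bytes)))
```

For example, 500 events/s of 4 KB each is about 1.95 MB/s, so the byte limit dominates and two TUs are needed. At 2,000 small events per second, the event-count limit dominates instead.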

Managing Azure Event Hubs involves monitoring the health and performance of your event processing pipeline. Azure provides various tools and services to help you gain insights into the behavior of your Event Hubs. Azure Monitor allows you to track important metrics such as incoming and outgoing events, processing latency, and resource utilization. By analyzing these metrics, you can identify bottlenecks, optimize your event processing logic, and ensure the efficient utilization of resources.

In addition to monitoring, Azure Event Hubs provides features for managing the lifecycle of your events. You can set retention policies to determine how long events are kept in Event Hubs, which helps you meet compliance and regulatory requirements. Consumers can also record checkpoints, which mark the last event your application successfully processed in each partition. After a failure or restart, your application resumes from the last checkpoint rather than from the beginning. Events processed after that checkpoint but before the failure are delivered again, so processing is at-least-once and your handlers should tolerate duplicates.
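The replay behavior around checkpoints is easy to see in a small simulation. Because a checkpoint records progress only up to the last saved position, events processed after it are delivered again on restart. The hypothetical `InMemoryCheckpointStore` and `process_partition` below model sequence numbers as list positions, checkpoint every few events, and inject a crash through a parameter. The real event processor stores checkpoints durably, for example in Azure Blob Storage.

```python
from typing import Dict, List, Optional


class InMemoryCheckpointStore:
    """Hypothetical checkpoint store keyed by partition id."""

    def __init__(self) -> None:
        self.checkpoints: Dict[str, int] = {}

    def save(self, partition_id: str, sequence_number: int) -> None:
        self.checkpoints[partition_id] = sequence_number

    def resume_from(self, partition_id: str) -> int:
        # Resume with the event after the last checkpointed one (or from the start)
        return self.checkpoints.get(partition_id, -1) + 1


def process_partition(events: List[str], store: InMemoryCheckpointStore, partition_id: str,
                      checkpoint_every: int, crash_after: Optional[int] = None) -> List[str]:
    processed: List[str] = []
    for seq in range(store.resume_from(partition_id), len(events)):
        if crash_after is not None and len(processed) == crash_after:
            break  # simulate a crash before the next checkpoint is written
        processed.append(events[seq])
        if (seq + 1) % checkpoint_every == 0:
            store.save(partition_id, seq)
    return processed


events = [f"e{i}" for i in range(10)]
store = InMemoryCheckpointStore()
first_run = process_partition(events, store, "0", checkpoint_every=4, crash_after=6)
second_run = process_partition(events, store, "0", checkpoint_every=4)
# e4 and e5 appear in both runs: they were processed after the last checkpoint (e3)
```

Checkpointing less often reduces writes to the checkpoint store but increases how much work is repeated after a failure. In this sketch, `checkpoint_every` is the setting that controls that trade-off.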

To further enhance the manageability of Azure Event Hubs, Azure provides integration with other services. For example, you can leverage Azure Logic Apps or Azure Functions to implement automated workflows and trigger actions based on the events received by Event Hubs. This enables you to build event-driven architectures and automate processes based on real-time data.

Best practices for performance and reliability

When it comes to utilizing Microsoft Azure Event Hubs for your applications, implementing best practices for performance and reliability is crucial. Event Hubs is designed to handle massive amounts of data and provide real-time streaming capabilities, but without careful consideration, you may encounter performance issues or data loss.

First and foremost, it is important to monitor the performance of your Event Hubs. Azure provides various monitoring tools and metrics that can give you insights into the throughput, latency, and incoming data rates. By regularly monitoring these metrics, you can identify any potential bottlenecks or issues and take proactive measures to optimize performance.

Another best practice is to partition your Event Hubs effectively. Partitioning allows you to scale your event processing and maintain high throughput. It is recommended to evenly distribute the workload across multiple partitions, ensuring that no single partition becomes a performance bottleneck. Additionally, consider the size and number of partitions based on the expected data volume and velocity to achieve optimal performance.
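Uneven partition load is easy to check once you have per-partition event counts, which you could collect in your own consumers. The hypothetical `find_hot_partitions` below flags partitions whose count exceeds a multiple of the mean. The 1.5 threshold is an arbitrary example value. Skew like this often comes from a few partition keys carrying most of the traffic.

```python
from typing import Dict, List


def find_hot_partitions(events_per_partition: Dict[str, int], threshold: float = 1.5) -> List[str]:
    """Return partitions receiving more than `threshold` times the mean load."""
    if not events_per_partition:
        return []
    mean = sum(events_per_partition.values()) / len(events_per_partition)
    return sorted(p for p, count in events_per_partition.items() if count > threshold * mean)
```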

To ensure reliability, have a robust error handling and retry mechanism in place. Unlike Azure Service Bus, Event Hubs has no built-in dead-letter queue. If you need to set failed events aside for later analysis, you must implement that yourself, for example by writing them to a separate event hub or a storage container. Implementing retries with exponential backoff helps you recover from transient errors without losing data.
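A retry loop for your own processing logic might look like the sketch below. It uses exponential backoff with full jitter, and once retries are exhausted it moves the event to an application-managed list that stands in for a dead-letter store. `TransientError`, `process_with_retry`, and the `dead_letters` list are hypothetical. Deciding which exceptions count as transient is up to your application, and the Event Hubs client libraries already apply their own retry policies to calls to the service itself. The `sleep` parameter exists so the function can be tested without waiting.

```python
import random
import time
from typing import Callable, List


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, throttling)."""


def process_with_retry(event: str, handler: Callable[[str], None], dead_letters: List[str],
                       max_attempts: int = 4, base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    for attempt in range(max_attempts):
        try:
            handler(event)
            return True
        except TransientError:
            if attempt == max_attempts - 1:
                break
            # Full jitter: wait a random time up to base_delay * 2^attempt seconds
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    # Event Hubs has no built-in dead-letter queue; park the event in an app-managed store
    dead_letters.append(event)
    return False
```

Non-transient exceptions propagate immediately, so programming errors surface instead of being retried.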

Furthermore, use checkpoints to track the progress of event processing. By checkpointing regularly, you can resume processing close to where it left off after failures or restarts. Events after the last checkpoint are read again, so make your processing idempotent to keep duplicate deliveries from causing inconsistent results.

Lastly, it is important to secure your Event Hubs to protect your data and prevent unauthorized access. Utilize Azure Active Directory for authentication and authorization, enable encryption at rest and in transit, and implement appropriate access control policies.

Integrating – Azure Functions and Event Hubs

Azure Functions and Event Hubs are two powerful components of Microsoft Azure that can work together to create a seamless and efficient data processing pipeline. Azure Functions is a serverless compute service that allows you to run code in the cloud without having to worry about infrastructure management. On the other hand, Azure Event Hubs is a fully managed, real-time data ingestion service that can handle millions of events per second.

When combined, Azure Functions and Event Hubs enable you to build event-driven architectures that can process and analyze large volumes of data in real-time. With Azure Functions, you can write small, focused pieces of code that perform specific tasks whenever an event is received from Event Hubs. This event-driven approach allows you to process and react to events in near real-time, making it ideal for scenarios such as IoT data processing, log analysis, and real-time analytics.

One of the key advantages of using Azure Functions with Event Hubs is the ability to scale automatically. Azure Functions scales out based on the incoming event load. Each partition is processed by at most one instance at a time, so the useful number of instances is bounded by the Event Hub's partition count. This elasticity is crucial when dealing with high-velocity event streams, where the incoming data rate can vary significantly.

Another benefit of using Azure Functions and Event Hubs together is the ease of integration. Azure Functions can be easily triggered by events from Event Hubs, allowing you to focus on writing the processing logic rather than managing the event ingestion pipeline. Additionally, Azure Functions supports multiple programming languages, including C#, JavaScript, Python, and PowerShell, giving you the flexibility to use your preferred language for writing your event processing code.

Integrating – Azure Stream Analytics and Event Hubs

Azure Stream Analytics and Event Hubs are two powerful components of Microsoft Azure that work hand in hand to unlock the full potential of real-time data processing and analysis.

Azure Stream Analytics allows you to process large volumes of streaming data in real-time, making it ideal for scenarios where near-instantaneous insights are required. With its simple yet powerful SQL-like language, you can easily define queries to transform, filter, and aggregate the incoming data streams. This enables you to extract meaningful information and gain valuable insights from the continuous flow of data.
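As a rough illustration, consider a Stream Analytics query such as `SELECT DeviceId, COUNT(*) AS Events FROM Input TIMESTAMP BY EventTime GROUP BY DeviceId, TumblingWindow(second, 10)`. It counts events per device in fixed, non-overlapping 10-second windows; the `Input` alias and column names are placeholders. The Python function below computes the same kind of aggregation over an in-memory list, which can help when reasoning about expected results. It is a hypothetical helper, not how Stream Analytics runs. It also assumes each window includes its end time and excludes its start time.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(events: Iterable[Tuple[float, str]],
                           window_seconds: int) -> Dict[Tuple[int, str], int]:
    """Count events per (window end, device id) in fixed, non-overlapping windows.
    Windows are treated as (start, end]: an event exactly on a boundary belongs
    to the window that ends there (an assumption about the target semantics)."""
    counts: Counter = Counter()
    for timestamp, device_id in events:
        # Label each window by its end time
        window_end = math.ceil(timestamp / window_seconds) * window_seconds
        counts[(window_end, device_id)] += 1
    return dict(counts)
```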

Event Hubs, on the other hand, acts as a scalable event ingestion service that can handle massive amounts of data ingress from various sources. It serves as a highly reliable and scalable entry point for ingesting data streams into Azure, allowing you to collect and store data from a wide range of devices, systems, and applications.

When combined, Azure Stream Analytics and Event Hubs provide a comprehensive solution for real-time data processing and analysis. You can configure Azure Stream Analytics to consume data from Event Hubs, enabling you to perform real-time analytics and derive actionable insights from the incoming data streams.

This integration opens up a world of possibilities for businesses across various industries. For example, in IoT (Internet of Things) scenarios, you can use Azure Stream Analytics and Event Hubs to process and analyze sensor data in real-time, enabling you to monitor and respond to critical events as they occur. In the financial sector, you can leverage this powerful combination to perform real-time fraud detection and risk analysis, helping you safeguard your organization against potential threats.

Integrating – Azure Logic Apps and Event Hubs

Azure Logic Apps and Event Hubs are a match made in heaven when it comes to unlocking the full power of Microsoft Azure. Logic Apps provide a visual and intuitive way to build workflows and automate business processes, while Event Hubs enable the ingestion, processing, and analysis of streaming data at scale.

By leveraging Azure Logic Apps with Event Hubs, you can seamlessly integrate your applications and services to respond to events in real-time. Whether it’s processing incoming data, triggering actions based on specific conditions, or orchestrating complex workflows, this combination offers endless possibilities.

One of the key advantages of using Logic Apps with Event Hubs is the flexibility and scalability it provides. Logic Apps allow you to easily connect to various data sources and services, including Event Hubs, without having to write complex code. With a wide range of connectors available, you can effortlessly integrate with popular applications, databases, and APIs.

Event Hubs, for its part, acts as a highly scalable and reliable event streaming platform that can handle massive volumes of data in real time. It allows you to collect data from multiple sources, such as IoT devices, social media feeds, or application logs, and process it in near real time. Event Hubs also supports event publishing and subscription patterns, making it ideal for building event-driven architectures.

With the combined power of Azure Logic Apps and Event Hubs, you can build robust and scalable solutions that are capable of handling complex event processing scenarios. Whether you need to trigger actions based on specific events, perform real-time analytics on streaming data, or integrate multiple systems together, this integration provides a comprehensive solution.

Securing and implementing data retention with Azure Event Hubs

Securing and implementing data retention with Azure Event Hubs is a crucial aspect of utilizing this powerful technology. As data flows through the Event Hubs, it is essential to ensure the security and privacy of sensitive information, as well as to comply with regulatory requirements.

One way to enhance security is to use Azure Active Directory (AAD, now Microsoft Entra ID) for access control. With role-based access control, you can grant granular permissions, for example with the built-in Azure Event Hubs Data Sender, Data Receiver, and Data Owner roles. Access is then limited to authorized individuals or applications. This helps protect your data from unauthorized access and reduces the risk of data breaches.

Another important consideration is data retention. Azure Event Hubs allows you to configure the retention period for your event data. You can choose a retention duration that aligns with your business requirements and compliance policies. This ensures that your data is retained for the necessary time period, enabling you to perform analysis, troubleshoot issues, and meet any legal or regulatory obligations.

To further enhance data security, make sure data is encrypted both at rest and in transit. Azure Event Hubs uses Transport Layer Security (TLS) to encrypt data transmitted between clients and the Event Hubs service. Data at rest is encrypted automatically with Azure Storage Service Encryption (SSE) using Microsoft-managed keys. On the Premium and Dedicated tiers, you can instead use customer-managed keys stored in Azure Key Vault.

Monitoring and auditing are also crucial for maintaining data security. Azure Monitor provides comprehensive monitoring capabilities, allowing you to track the performance and health of your Event Hubs. It enables you to set up alerts for critical events and anomalies, ensuring that you can respond promptly to any potential security issues.

FAQ – Event Hubs

Q: What is Microsoft Azure Event Hubs?

A: Microsoft Azure Event Hubs is a scalable event processing service that can ingest and process millions of events per second.

Q: How can I create an Event Hub?

A: You can create an Event Hub in the Azure portal. Select your desired resource group, create a new Event Hubs namespace, and then create an Event Hub within that namespace. You can also create both programmatically, for example with the Azure CLI, Azure Resource Manager templates, or the Azure SDKs.

Q: What is an Event Hubs namespace?

A: An Event Hubs namespace is a container that holds one or more Event Hubs. It provides a scoping boundary for all the Event Hubs in it.

Q: How can I use Event Hubs in my Azure services?

A: Event Hubs can be used as a streaming service to process and store large volumes of events. It can be integrated with other Azure services, such as Azure Data Factory or Azure Functions, to build an end-to-end data pipeline.

Q: What is the difference between Event Hubs and Azure Event Grid?

A: Event Hubs is a scalable event processing service for ingesting and processing large volumes of events, while Azure Event Grid is a routing service for subscribing and reacting to events from various Azure resources.

Q: Can Event Hubs ingest events from an Apache Kafka application?

A: Yes. Event Hubs provides a Kafka-compatible endpoint on the Standard tier and above, so many existing Kafka applications can work with Event Hubs, often with only configuration changes.

Q: How can I ingest millions of events per second into Event Hubs?

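A: Event Hubs handles massive event ingestion through partitions and capacity units. Throughput units (Standard tier) or processing units (Premium tier) set how much data the namespace can ingest. The number of partitions sets how far consumption can be parallelized. To scale, add units and choose enough partitions when you create the Event Hub.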

Q: Can I choose the number of partitions for my Event Hub?

A: Yes, when creating an Event Hub, you can specify the number of partitions based on your application’s requirements.

Q: How can I process the data ingested into Event Hubs?

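A: Once the data is ingested into Event Hubs, you can process it with services such as Azure Functions or Azure Stream Analytics, or with your own applications built on the Event Hubs client libraries. To keep the raw events for later processing, you can capture them to Azure Data Lake Storage.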

Q: Can Event Hubs store the events for later processing?

A: Yes, Event Hubs offers a feature called Event Hubs Capture, which allows you to automatically capture the events and store them in Azure Blob Storage or Azure Data Lake Storage for long-term processing or analysis.
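Consumers of captured data typically locate files by their blob names. The sketch below assumes Capture's default naming format, `{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}`, with Avro files (hence the `.avro` suffix) and UTC date and time segments. If you configured a custom format, adjust accordingly. `capture_blob_name` and `capture_hour_prefix` are hypothetical helpers. The prefix helper, for example, builds a prefix for listing one hour of files for a partition.

```python
from datetime import datetime, timezone


def capture_blob_name(namespace: str, event_hub: str, partition_id: str, when: datetime) -> str:
    """Blob name for a Capture file written at `when`, assuming the default format."""
    when = when.astimezone(timezone.utc)  # date/time segments assumed to be in UTC
    return f"{namespace}/{event_hub}/{partition_id}/{when:%Y/%m/%d/%H/%M/%S}.avro"


def capture_hour_prefix(namespace: str, event_hub: str, partition_id: str, when: datetime) -> str:
    """Prefix matching every Capture file for one partition within one hour."""
    # Drop the minute and second segments from the full blob name
    return capture_blob_name(namespace, event_hub, partition_id, when).rsplit("/", 2)[0] + "/"
```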
