Last Updated on September 26, 2024 by Arnav Sharma

Organizations adopting AI need to control how it is used while keeping data secure and compliant. Microsoft Purview AI Hub and Microsoft 365 Copilot address this together: Copilot brings AI assistance into everyday work, and Purview applies enterprise data governance to that use. This blog explains how the two work together, what licenses they require, and what to consider before deploying them.

What is Microsoft Purview AI Hub?

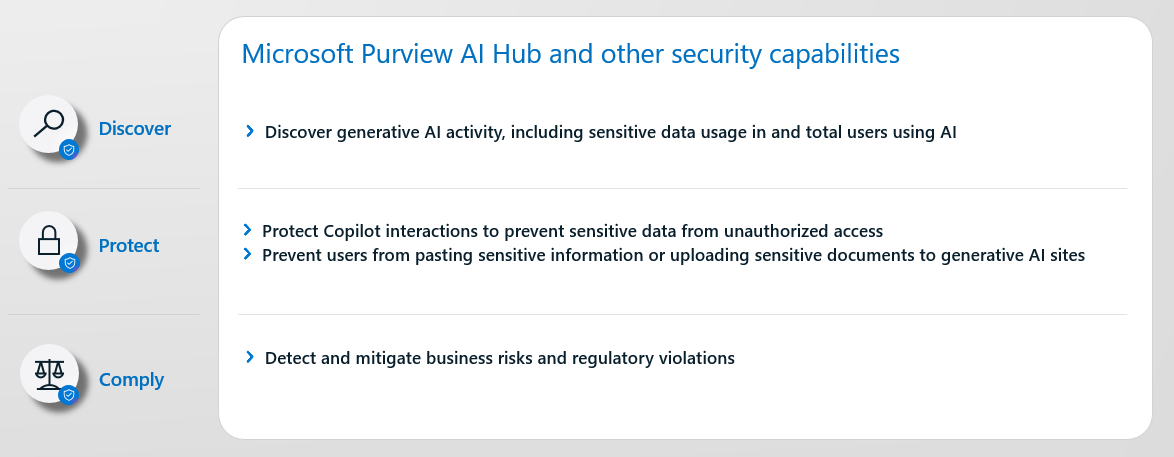

Microsoft Purview AI Hub is a unified platform for managing, monitoring, and securing the use of AI technologies in an organization. It integrates compliance and governance capabilities that safeguard sensitive data, ensuring that AI tools such as Microsoft 365 Copilot and third-party AI apps such as ChatGPT are used securely.

Features:

- Data Security Policies: These policies enforce rules around who can access data and how it is used within AI systems. For example, administrators can set data loss prevention (DLP) policies that restrict users from copying or sending sensitive data via AI tools.

- AI Activity Insights: The AI Hub provides analytics that show how AI interacts with organizational data. For example, it can show which documents, emails, or other data sources AI systems like Copilot access and use.

- Compliance Controls: Organizations can enforce governance measures like sensitivity labels, which classify and protect data based on its content. These controls ensure that AI apps only access data they are permitted to use, complying with regulations such as GDPR or HIPAA.
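To make the activity-insights idea concrete, here is a minimal Python sketch of the kind of aggregation such a report performs: counting AI interactions per app and per sensitivity label. The `AIEvent` record, its fields, and the sample values are hypothetical; the AI Hub builds its own reports, and this is not its API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class AIEvent:
    """Hypothetical record of one AI interaction that touched organizational data."""
    app: str                # e.g. "Microsoft 365 Copilot" or "ChatGPT"
    user: str
    label: Optional[str]    # sensitivity label of the data involved, if any


def summarize(events: Iterable[AIEvent]) -> Counter:
    """Count interactions per (app, label) pair; unlabeled data counts as "Unlabeled"."""
    return Counter((e.app, e.label or "Unlabeled") for e in events)


def sensitive_by_app(events: Iterable[AIEvent], sensitive: set[str]) -> Counter:
    """Count, per app, the interactions whose data carried one of the given labels."""
    return Counter(e.app for e in events if e.label in sensitive)


if __name__ == "__main__":
    sample = [
        AIEvent("Microsoft 365 Copilot", "alice", "Confidential"),
        AIEvent("Microsoft 365 Copilot", "bob", None),
        AIEvent("ChatGPT", "carol", "Confidential"),
    ]
    print(summarize(sample))
    print(sensitive_by_app(sample, {"Confidential", "Restricted"}))
```

A view like `sensitive_by_app` is the interesting one for governance: it highlights third-party apps that are receiving labeled data.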

What is Microsoft 365 Copilot?

Microsoft 365 Copilot is an AI assistant built into Microsoft 365 apps (Word, Excel, PowerPoint, Outlook, Teams, etc.) to help users work faster. Using large language models (LLMs), Copilot helps with tasks such as drafting documents, analyzing data, and summarizing emails.

Detailed Features:

- Copilot in Word: Assists in document creation by generating summaries, drafting text, or refining existing content. It can reference multiple documents to enrich the output and provide visual elements like tables.

- Copilot in Excel: Helps users analyze and interpret data by generating insights, suggesting trends, and automating data manipulation tasks like filtering or sorting.

- Copilot in Outlook: Speeds up communication by drafting emails, summarizing long email threads, and suggesting actions like follow-up meetings. Users can also customize the tone and length of their emails.

- Copilot in Teams: Summarizes key points from meetings or chats, organizes action items, and helps users catch up on missed discussions by providing clear, concise summaries.

- Copilot in PowerPoint: Transforms written content into presentations, generating slides, speaker notes, and visual elements from simple text prompts.

Licensing Required to Use Microsoft Purview AI Hub with Copilot

To use Microsoft Purview AI Hub together with Microsoft 365 Copilot, organizations need the right combination of licenses: some enable the AI features, and others enable the governance features that monitor and protect them.

Licensing Prerequisites:

- Microsoft 365 E3/E5 Plans: The core plans (E3 for basic features, E5 for advanced) provide essential tools like data security, auditing, and compliance capabilities that integrate with Copilot.

- Microsoft 365 Copilot Add-On: Copilot functionality is not included by default. Organizations must purchase a Copilot add-on license to activate AI features within Microsoft 365 applications.

- Microsoft 365 E5 Compliance: For organizations that need advanced compliance features such as eDiscovery, auditing, and data classification for AI interactions, E5 Compliance capabilities are essential. They are included in Microsoft 365 E5 and available as an add-on for E3, and they provide the tools to manage, investigate, and audit AI usage.
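The prerequisites above boil down to a simple rule per user. The sketch below encodes it in Python, assuming each user's assigned licenses are available as a set of display names; those names are illustrative strings, not Microsoft SKU identifiers, and a real check would read license assignments from your tenant.

```python
from dataclasses import dataclass, field

# Illustrative license names; these are NOT Microsoft SKU identifiers.
BASE_PLANS = {"Microsoft 365 E3", "Microsoft 365 E5"}
COPILOT_ADDON = "Microsoft 365 Copilot"
# Microsoft 365 E5 includes the E5 Compliance capabilities; E3 users need the add-on.
ADVANCED_COMPLIANCE = {"Microsoft 365 E5", "Microsoft 365 E5 Compliance"}


@dataclass
class User:
    name: str
    licenses: set[str] = field(default_factory=set)


def copilot_ready(user: User) -> bool:
    """True if the user has an E3/E5 base plan plus the Copilot add-on."""
    return bool(user.licenses & BASE_PLANS) and COPILOT_ADDON in user.licenses


def advanced_compliance_ready(user: User) -> bool:
    """True if the user has E5 or the E5 Compliance add-on."""
    return bool(user.licenses & ADVANCED_COMPLIANCE)


def readiness_report(users: list[User]) -> dict[str, tuple[bool, bool]]:
    """Map each user name to (copilot_ready, advanced_compliance_ready)."""
    return {u.name: (copilot_ready(u), advanced_compliance_ready(u)) for u in users}


if __name__ == "__main__":
    team = [
        User("alice", {"Microsoft 365 E3", "Microsoft 365 Copilot"}),
        User("bob", {"Microsoft 365 E5", "Microsoft 365 Copilot"}),
    ]
    print(readiness_report(team))
```

Keeping the two checks separate makes it easy to spot users who can use Copilot but whose AI interactions are not yet covered by advanced compliance tooling.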

Microsoft Purview Strengthens Information Protection for Copilot

Microsoft Purview plays a key role in ensuring that the use of Copilot adheres to organizational security and compliance standards. It integrates with Copilot to protect sensitive information through mechanisms like encryption and sensitivity labels.

How It Works:

- Sensitivity Labels: These are metadata tags that classify data by sensitivity (e.g., “Confidential”, “Restricted”). Copilot honors these labels and any protection they apply, so it only works with labeled content that the signed-in user is authorized to access.

- Data Encryption: Purview ensures that Copilot respects encryption rules. For example, if a file is encrypted and does not provide “EXTRACT” rights, Copilot cannot pull data from it, even if it can be viewed by the user.

- Label Inheritance: When Copilot creates new content based on a labeled document, the new content automatically inherits the original sensitivity label. This ensures that the same level of data protection is applied to the new content, maintaining security and compliance.
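Label inheritance can be modeled as choosing one label from the sources Copilot used. This Python sketch assumes a hypothetical label taxonomy with numeric priorities, and assumes that when several labeled sources are referenced, the highest-priority label is inherited. That matches our reading of Microsoft's documentation for Copilot, but check the current documentation before relying on it.

```python
from typing import Optional, Sequence

# Hypothetical label taxonomy: a higher number means a higher priority (more sensitive).
LABEL_PRIORITY = {
    "Public": 0,
    "General": 1,
    "Confidential": 2,
    "Restricted": 3,
}


def inherited_label(source_labels: Sequence[Optional[str]]) -> Optional[str]:
    """Return the label that new content should inherit from its sources.

    Unlabeled sources (None) are ignored. If no source is labeled, nothing is
    inherited; otherwise the highest-priority label wins.
    """
    labeled = [lbl for lbl in source_labels if lbl is not None]
    if not labeled:
        return None
    return max(labeled, key=LABEL_PRIORITY.__getitem__)
```

For example, `inherited_label(["General", "Confidential", None])` returns `"Confidential"`, while a list of only unlabeled sources returns `None`.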

Microsoft Purview Compliance Management for Copilot

Microsoft Purview helps organizations maintain compliance by offering tools to manage and monitor how Copilot interacts with data. These tools are especially useful for industries with stringent regulatory requirements (like finance or healthcare) where monitoring AI activity is crucial.

Compliance Features:

- Audit Logs: Every Copilot interaction is logged for audit purposes, enabling organizations to review which data was accessed, how it was used, and what actions were taken. This helps organizations ensure that AI usage is compliant with internal and external regulations.

- eDiscovery: If a legal case requires access to specific Copilot interactions (like email threads or document generation), eDiscovery tools allow organizations to search for and retrieve relevant Copilot data. This is particularly useful for litigation or regulatory audits.

- Retention Policies: Organizations can define policies that govern how long Copilot interactions, such as generated documents or chat conversations, are retained. This ensures that critical data is preserved for compliance purposes and can be deleted when no longer needed.
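A "retain, then delete" retention policy reduces to a date comparison per item. The sketch below is a simplified Python model, not Purview's retention engine: the function name and parameters are hypothetical, and it assumes that an item under an eDiscovery hold is always kept, in line with Purview's principle that retention takes precedence over deletion.

```python
from datetime import date, timedelta
from enum import Enum


class RetentionAction(Enum):
    RETAIN = "retain"
    DELETE = "delete"


def retention_action(created: date, retain_days: int, today: date,
                     on_hold: bool = False) -> RetentionAction:
    """Decide what a hypothetical 'retain then delete' policy does with one item.

    The retention period ends retain_days after the item was created; an item
    under an eDiscovery hold is kept regardless of its age.
    """
    if on_hold:
        return RetentionAction.RETAIN
    expires = created + timedelta(days=retain_days)
    return RetentionAction.RETAIN if today < expires else RetentionAction.DELETE


if __name__ == "__main__":
    chat_created = date(2023, 1, 1)
    print(retention_action(chat_created, 365, date(2023, 12, 31)))
    print(retention_action(chat_created, 365, date(2024, 1, 1)))
```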

Microsoft Purview AI Hub Prerequisites and Considerations

Before deploying Microsoft Purview AI Hub, certain prerequisites and planning considerations need to be addressed to ensure smooth integration and operation.

Key Prerequisites:

- Licensing: Ensure that all users who interact with Copilot and the AI Hub have the necessary licenses: a Microsoft 365 E3 or E5 plan plus the Copilot add-on.

- Permissions: Assign the correct roles within Microsoft Purview and Microsoft Entra ID (formerly Azure AD) to security, compliance, and admin teams. Roles like Compliance Administrator, Security Reader, or Global Administrator allow authorized users to manage AI activities and access reports.

- Onboarding Devices: To monitor third-party AI apps (like ChatGPT), devices must be onboarded to Microsoft Purview. Additionally, users need the Microsoft Purview browser extension to track AI-related activities in browsers.

- Endpoint DLP Policies: Organizations should set up Endpoint Data Loss Prevention (DLP) policies that can monitor and control the sharing of sensitive information with unauthorized AI apps. For example, if a user attempts to paste a credit card number into a third-party AI tool, DLP policies can block or warn them.
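The credit card example can be sketched in Python: find digit runs that look like card numbers, confirm them with the Luhn checksum, then block or warn if the destination is not an approved AI app. This is a hypothetical simplification for illustration only. Purview's built-in sensitive information types and Endpoint DLP rules are configured in the portal, and the function names, the regex, and the allow-list parameter here are assumptions.

```python
import re
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"


# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum over a string of digits."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    """True if the text contains a Luhn-valid 13-19 digit number."""
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            return True
    return False


def paste_action(text: str, destination: str, allowed_apps: set[str],
                 block_mode: bool = True) -> Action:
    """Decide what happens when a user pastes text into an AI app (hypothetical rule)."""
    if destination in allowed_apps or not contains_card_number(text):
        return Action.ALLOW
    return Action.BLOCK if block_mode else Action.WARN
```

The `block_mode` flag mirrors the choice the text describes between blocking the paste outright and only warning the user.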

Information Protection Considerations for Copilot

Data security is paramount when deploying Microsoft 365 Copilot. Organizations need to ensure that sensitive information is protected throughout the Copilot workflow, especially when it involves generating new content based on existing data.

Key Considerations:

- Sensitive Data Access: Copilot follows existing sensitivity labels and encryption settings. If a document is labeled as confidential and only grants viewing rights (but not extraction), Copilot will not be able to summarize or pull data from it.

- Label Inheritance: When Copilot creates new content based on a labeled source, the sensitivity label is automatically inherited. For instance, if a confidential document is used to generate a PowerPoint presentation, the new presentation will also be labeled as confidential.

- Encryption Restrictions: Copilot will only be able to access encrypted content if it’s open and actively in use. For unopened encrypted documents, Copilot cannot retrieve or use the data, ensuring additional layers of protection.
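The rule behind these considerations is that Copilot needs both VIEW and EXTRACT usage rights on an encrypted file, while viewing alone needs only VIEW. The Python sketch below models that check with a hypothetical `ProtectedFile` type. It covers only usage rights and leaves out ordinary access permissions (for example, SharePoint sharing) for unencrypted files.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ProtectedFile:
    """Hypothetical model of a file and the usage rights granted per user."""
    name: str
    encrypted: bool
    rights: Dict[str, Set[str]] = field(default_factory=dict)  # user -> usage rights


def user_can_view(f: ProtectedFile, user: str) -> bool:
    """Unencrypted files are not restricted by usage rights; encrypted ones need VIEW."""
    return not f.encrypted or "VIEW" in f.rights.get(user, set())


def copilot_can_use(f: ProtectedFile, user: str) -> bool:
    """Copilot may summarize or quote an encrypted file only with VIEW and EXTRACT."""
    if not f.encrypted:
        return True
    return {"VIEW", "EXTRACT"} <= f.rights.get(user, set())


if __name__ == "__main__":
    plan = ProtectedFile("plan.docx", True, {"alice": {"VIEW"}, "bob": {"VIEW", "EXTRACT"}})
    for person in ("alice", "bob"):
        print(person, user_can_view(plan, person), copilot_can_use(plan, person))
```

Here Alice can open the file but Copilot cannot use it on her behalf, which is the view-without-extract case described above.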

Compliance Management Considerations for Copilot

To ensure regulatory compliance, organizations need to carefully plan how they use Microsoft 365 Copilot. This involves setting up governance measures that track, monitor, and regulate AI interactions.

Key Considerations:

- Auditability: Every user interaction with Copilot is captured in audit logs. This includes prompts, responses, and data interactions, allowing organizations to trace and verify AI usage for security and compliance audits.

- Retention Policies: Establish policies for how long Copilot-generated content is stored. This is crucial for industries with strict data retention rules, such as financial services, where certain records must be kept for a specified duration.

- Content Discovery: Use eDiscovery tools to search, hold, and export Copilot interactions during legal investigations or regulatory reviews. This ensures that organizations can produce relevant records when required by legal authorities.
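When reviewing audit logs, a typical first step is to narrow an export down to Copilot events. This Python sketch assumes the export has one JSON record per line with `Operation`, `UserId`, and `CreationTime` fields, and that Copilot events use the operation name `CopilotInteraction`. Verify both against your own export, since this field layout is an assumption.

```python
import json
from datetime import datetime
from typing import Iterable, Iterator, Optional

COPILOT_OPERATION = "CopilotInteraction"  # assumed operation name; verify in your export


def copilot_events(json_lines: Iterable[str], user: Optional[str] = None,
                   since: Optional[datetime] = None) -> Iterator[dict]:
    """Yield Copilot audit records, optionally filtered by user and start time.

    Each non-empty line is assumed to be one JSON audit record with 'Operation',
    'UserId', and 'CreationTime' (ISO 8601 without a timezone) fields.
    """
    for line in json_lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        if record.get("Operation") != COPILOT_OPERATION:
            continue
        if user is not None and record.get("UserId", "").lower() != user.lower():
            continue
        if since is not None and datetime.fromisoformat(record["CreationTime"]) < since:
            continue
        yield record
```

Because it is a generator, the same filter works on a file object opened over a large export without loading the whole log into memory.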

FAQ:

Q: What is Microsoft Copilot, and how does it work with Microsoft 365?

A: Microsoft 365 Copilot brings AI into Microsoft 365 apps, helping users create documents, presentations, and other content. It works only with data the user already has permission to access and honors the data security policies, such as sensitivity labels and encryption, that Microsoft Purview applies.

Q: How does Microsoft Purview AI Hub provide security for AI applications?

A: Microsoft Purview AI Hub provides data security and compliance protections for AI applications. It helps manage sensitive data, ensuring that AI-driven tasks adhere to regulations and mitigate risks associated with AI usage.

Q: What are the benefits of using generative AI in Microsoft products?

A: Generative AI apps in Microsoft products, like Copilot, speed up content creation. Combined with Purview compliance protections, which cover Microsoft 365 as well as supported third-party generative AI sites, sensitive data referenced in AI prompts and outputs can be monitored and protected.

Q: What is Microsoft Purview’s role in ensuring data security and compliance for AI?

A: Microsoft Purview provides the data security and compliance controls for AI usage, including generative AI. It classifies and protects sensitive data, monitors how AI apps use it, and helps organizations manage that data according to regulatory standards.

Q: How does Microsoft Purview Portal help manage AI data security?

A: The Microsoft Purview portal lets administrators manage and monitor AI data security, including sensitive data referenced in AI interactions. It provides tools to protect data used by AI and supports compliance requirements across the Microsoft 365 tenant.

Q: What are the risks associated with AI usage, and how does Microsoft help mitigate them?

A: Risks of AI usage include data breaches, privacy violations, and regulatory non-compliance. Microsoft helps mitigate them with tools like Purview AI Hub, which provides data protection policies and Adaptive Protection for AI usage, including AI assistants like Copilot.

Q: How does Microsoft Copilot ensure compliance and security when generating AI content?

A: Microsoft 365 Copilot honors the controls configured in Microsoft Purview. It respects sensitivity labels and encryption usage rights, applies the source label to new content it generates, and its interactions can be audited, retained, and searched with eDiscovery.

Q: What is the Microsoft Purview Compliance Portal, and how does it enhance AI data security?

A: The Microsoft Purview Compliance Portal is a central console where administrators manage compliance and data security for AI applications. It provides tools to monitor, protect, and manage data referenced in AI-generated responses and helps organizations meet regulatory requirements for AI.

Q: What considerations should organizations take when deploying Microsoft Purview AI Hub?

A: Before deploying Microsoft Purview AI Hub, organizations should confirm licensing for every user, assign the right Purview and Microsoft Entra roles, onboard devices and deploy the Purview browser extension to monitor third-party AI apps, and set up endpoint DLP policies. They should also plan sensitivity labels, retention policies, and audit requirements for AI interactions.

Q: How does Microsoft manage third-party AI sites and ensure data security?

A: Microsoft does not control third-party AI sites. Instead, Microsoft Purview monitors how users interact with supported third-party generative AI sites through onboarded devices and the Purview browser extension, and endpoint DLP policies, including those driven by Adaptive Protection, can warn or block users who try to share sensitive data with them.

Q: What role does Azure Rights Management Service play in securing AI data?

A: Azure Rights Management Service provides the encryption and access controls behind Microsoft Purview sensitivity labels. Its usage rights, such as VIEW and EXTRACT, determine who can open protected content and whether Copilot can use it, keeping sensitive data referenced in AI tasks away from unauthorized users.